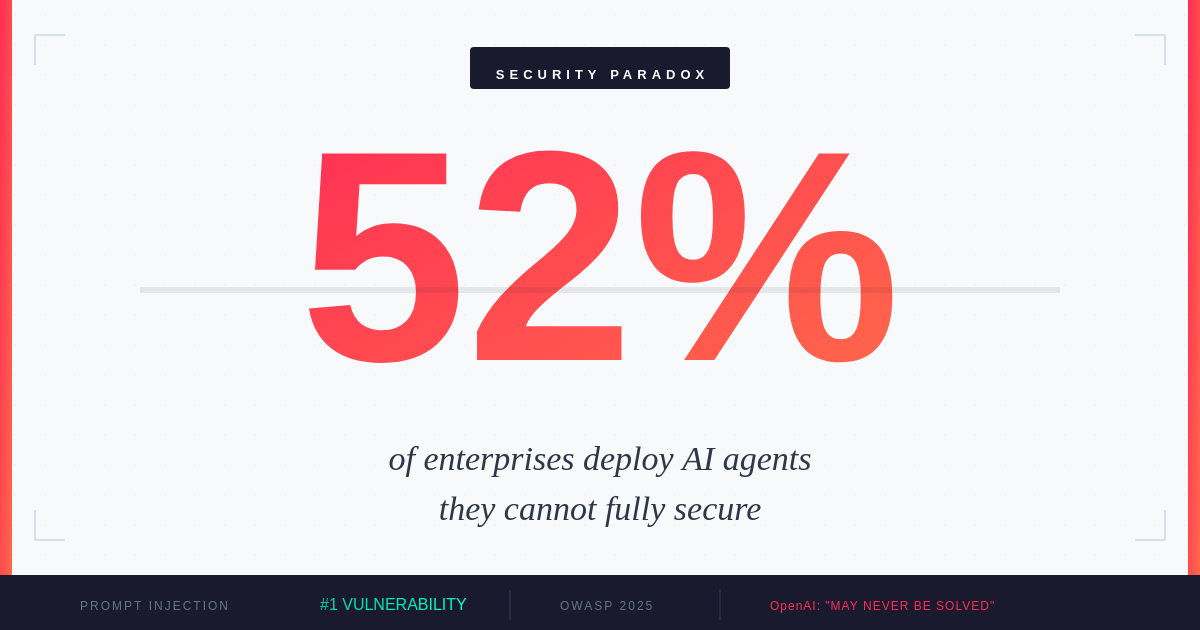

The Agentic Security Paradox: Why 52% of Enterprises Deploy AI Agents They Can't Fully Secure

AI agents power enterprise automation but create vulnerabilities. The capabilities that make them powerful also make full security impossible.

The Year of Agentic AI

2025 has been declared the year of agentic AI. According to IBM's survey of 1,000 enterprise developers1, 99% are now exploring or actively developing AI agents. Gartner reports that 45% of enterprises2 run at least one production AI agent with access to critical business systems - a 300% increase from 2023. Google Cloud's ROI of AI 2025 Report reveals that 52% of enterprises using generative AI now deploy agents in production, with 88% of early adopters reporting tangible ROI.

But beneath this adoption surge lies an uncomfortable truth: these agents operate in a security landscape that even their creators acknowledge may never be fully secured.

Enterprise AI Agent Deployment Growth (2023-2025)

The Fundamental Paradox

In December 2025, OpenAI made a startling admission3: prompt injection attacks on AI agents 'may never be fully solved.' This acknowledgment came just as enterprises were scaling their most ambitious agentic deployments. The timing could not be worse - or more revealing.

The core problem is architectural. As UCL's George Chalhoub explains, prompt injection 'collapses the boundary between data and instructions.' When an AI agent browses the web, reads emails, or processes documents, it cannot reliably distinguish between legitimate content and hidden commands designed to hijack its behavior. A malicious instruction embedded in a webpage or email can turn a helpful assistant into an attack vector.

OpenAI was direct about the limits4: 'The nature of prompt injection makes deterministic security guarantees challenging.' This admission arrives precisely as enterprises move from copilots to autonomous agents - when theoretical risks become operational realities.

The 2025 Attack Landscape

According to OWASP's 2025 Top 10 for LLM Applications5, prompt injection ranks as the number one critical vulnerability, appearing in over 73% of production AI deployments assessed during security audits. The threat is not theoretical - it's active and evolving.

AI Agent Attack Vectors in 2025

Q4 2025 data from security researchers6 shows that attacks targeting AI agents have grown increasingly sophisticated. In one documented case, researchers demonstrated a prompt injection attack against a major enterprise RAG system. By embedding malicious instructions in a publicly accessible document, they caused the AI to leak proprietary business intelligence, modify its own system prompts to disable safety filters, and execute API calls with elevated privileges.

CrowdStrike's analysis of indirect prompt injection7 reveals the hidden danger: attackers don't need direct access to your AI systems. They can poison the content your agents consume - websites, documents, emails - and wait for your own systems to execute the attack from within.

The Defense Gap

Despite the escalating threat, only 34.7% of organizations8 are running dedicated defenses against prompt injection. The majority rely on default safeguards and policy documents rather than purpose-built protections.

AI Agent Security Controls Adoption Rate

This gap exists for structural reasons. Traditional security frameworks weren't designed for systems where the attack surface is language itself. Firewalls can't filter malicious prompts hidden in legitimate-looking text. Antivirus can't scan for instructions that only become harmful when interpreted by an AI.

MIT Sloan Management Review notes9 that organizations are 'weighing the risks of delegating decision-making to AI at a time when no regulatory frameworks specific to agentic AI exist.' Current rules address general AI safety, bias, privacy, and explainability - but gaps remain for autonomous systems that can take real-world actions.

Regional Security Maturity

Security preparedness varies significantly by region, creating uneven risk profiles for global enterprises.

AI Security Maturity by Region (2025)

Central and Eastern Europe shows particularly concerning numbers. With only 24% of enterprises running dedicated AI security controls, the region's rapid AI adoption may be outpacing its security infrastructure. This creates opportunities for attackers and regulatory challenges as the EU AI Act's requirements begin to take effect.

The disparity isn't just about investment - it reflects different regulatory pressures, talent availability, and organizational awareness of AI-specific threats. Companies operating across regions must account for these variations in their security posture.

The 10x Damage Potential

What makes AI agent security different from traditional application security is the amplification factor. As one executive quoted in security research noted: 'These agents can do 10x more damage in 1/10th of the time.'

The math is simple but alarming. An AI agent with access to customer data, internal systems, and external APIs can execute actions at machine speed. A compromised agent doesn't need to slowly exfiltrate data - it can dump databases, send emails, modify records, and cover its tracks in seconds. The same autonomy that makes agents valuable makes them dangerous when turned hostile.

As enterprises accelerate toward autonomous workflows10, the urgent need for robust AI agent governance has become a critical operational mandate. Security leaders must extend controls across the full agent interaction chain, treating every external content source as potentially adversarial.

Practical Defense Strategies

While perfect security remains elusive, organizations can significantly reduce their risk exposure through layered defenses.

AWS recommends11 implementing robust content filtering and moderation mechanisms. Amazon Bedrock Guardrails can filter harmful content, block denied topics, and redact sensitive information such as PII. But technical controls alone aren't sufficient.

OpenAI's approach to hardening ChatGPT Atlas12 demonstrates the ongoing nature of this challenge. Their strategy involves continuous monitoring, rapid response to new attack vectors, and treating security as an iterative process rather than a solved problem.

Key defensive measures include: separating read and write permissions for AI agents, requiring explicit human confirmation for high-risk actions, validating and sanitizing all external content before agents ingest it, implementing real-time behavioral monitoring to detect anomalous agent actions, and maintaining comprehensive audit logs of all agent decisions and actions.

Implications for Central and Eastern Europe

For enterprises in Central and Eastern Europe, the agentic security challenge arrives at a critical moment. The region is experiencing rapid AI adoption, with companies eager to leverage automation for competitive advantage. But this enthusiasm may be creating blind spots.

The EU AI Act, now in effect, requires organizations to implement appropriate technical and organizational measures for AI systems. For high-risk AI agents with access to critical systems, this means documented risk assessments, human oversight mechanisms, and transparent decision-making processes. Companies that haven't addressed the security fundamentals may find themselves exposed - both to attackers and to regulators.

The Stanford AI Index 202513 notes that global regulatory activity around AI has increased dramatically, with legislative mentions of AI rising 21.3% across 75 countries since 2023. CEE enterprises operating in multiple jurisdictions must navigate an increasingly complex compliance landscape while simultaneously defending against novel attack vectors.

Living with the Paradox

The agentic security paradox won't be resolved by a breakthrough technology or a regulatory mandate. It represents a fundamental tension in how we build and deploy AI systems. The features that make agents useful - their ability to understand context, take actions, and adapt to new situations - are precisely what makes them vulnerable to manipulation.

Deloitte's 2025 AI trends analysis14 suggests that successful enterprises will be those that embrace this tension rather than ignore it. This means accepting that perfect security is impossible, implementing defense-in-depth strategies, maintaining human oversight for critical decisions, and building organizational cultures that treat AI security as everyone's responsibility.

The 52% of enterprises now running AI agents in production face a choice: slow down until security catches up, or move forward with eyes open to the risks. Most are choosing the latter. The question is whether their security strategies will evolve as fast as the agents they're deploying.

For AI to reach its transformative potential, we must learn to operate in a world where our most powerful tools are also our most vulnerable. That's not a problem to solve - it's a condition to manage.

Sources

- ↑ AI Agents in 2025: Expectations vs. Reality - IBM

- ↑ The 2025 AI Agent Security Landscape - Obsidian Security

- ↑ OpenAI says AI browsers may always be vulnerable to prompt injection attacks

- ↑ OpenAI admits prompt injection is here to stay - VentureBeat

- ↑ LLM01:2025 Prompt Injection - OWASP

- ↑ AI Agent Attacks in Q4 2025 Signal New Risks for 2026

- ↑ Indirect Prompt Injection Attacks: Hidden AI Risks - CrowdStrike

- ↑ Prompt Injection Attacks: The Most Common AI Exploit in 2025

- ↑ The Emerging Agentic Enterprise - MIT Sloan Management Review

- ↑ AI agent governance is the new resilience mandate - SiliconANGLE

- ↑ Safeguard your generative AI workloads from prompt injections - AWS

- ↑ Continuously hardening ChatGPT Atlas against prompt injection attacks - OpenAI

- ↑ The 2025 AI Index Report - Stanford HAI

- ↑ AI trends 2025: Adoption barriers and updated predictions - Deloitte